I’ve got a ticket to DRY

All programmers tend to agree that repeating yourself [in code] should be avoided. This is commonly known by the acronym DRY.

After looking at some demos of a search engine today, a light bulb lit up in my head [not an energy-efficient one, I’m afraid]. The search interface let you select from different categories, and the results updated dynamically. Nothing remarkable in that, but wouldn’t it be great if we could search our code this way? And I’m not talking about the ability to find something in just your project. What if you could search the entire enterprise code base using filters for method names, return types and so on, right within the IDE? Every time you wrote a new method or class you could quickly find similar pieces of code elsewhere in the enterprise.

Now this may lead some time-pressured or less experienced developers to copy and paste more, but for me it would identify areas where it makes sense to provide libraries or services based on the actual coding needs of the developers. It would also help target problem areas across different projects.

I may try to knock up a proof of concept in .NET using something like Lucene as the search engine. I’m not sure how this might work just yet, but it would either examine the source itself or use static analysis, with something like Cecil and the approach I’ve talked about before. Does anybody else think this is a good idea? What should the filters be?
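To give a feel for the kind of filter involved, here is a minimal sketch that filters methods by name fragment and return type. It is only an illustration under some loud assumptions: it uses plain `System.Reflection` over an already loaded assembly rather than Lucene or Cecil, and `MethodSearch` and `Find` are hypothetical names, not part of any existing tool.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

public static class MethodSearch
{
    // Hypothetical helper: returns the methods declared in the assembly whose
    // name contains the given fragment and, if a return type is supplied,
    // whose return type matches it exactly.
    public static IEnumerable<MethodInfo> Find(Assembly assembly, string nameContains, Type returnType)
    {
        const BindingFlags flags = BindingFlags.Public | BindingFlags.NonPublic |
                                   BindingFlags.Instance | BindingFlags.Static |
                                   BindingFlags.DeclaredOnly;

        return assembly.GetTypes()
            .SelectMany(type => type.GetMethods(flags))
            .Where(m => m.Name.IndexOf(nameContains, StringComparison.OrdinalIgnoreCase) >= 0)
            .Where(m => returnType == null || m.ReturnType == returnType);
    }

    public static void Main()
    {
        // Example query: methods named like "TryParse" that return bool,
        // searched in the core library purely for demonstration.
        foreach (var method in Find(typeof(string).Assembly, "TryParse", typeof(bool)).Take(5))
        {
            Console.WriteLine($"{method.DeclaringType.FullName}.{method.Name}");
        }
    }
}
```

A real version would need a persistent index across many projects, which is where a search engine would come in; this sketch only shows that the two filters mentioned above are cheap to express.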

Quality Radar

Now I’m back on the blogwagon, I thought I’d let you know about one of the things I’ve been doing over the last couple of months.

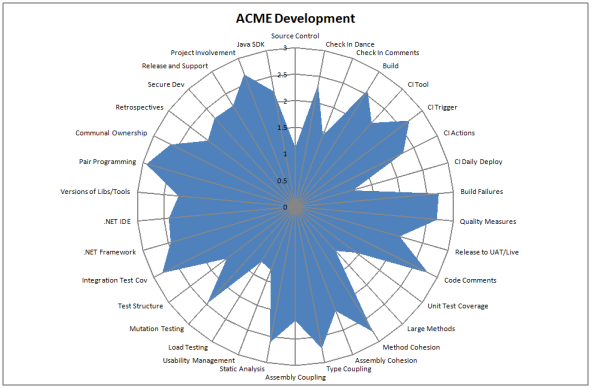

As useful as standards and guidelines are, they are very much based in the here and now. What is missing is a statement of the goals for your development practices over the next year or two. Where do you and your organisation want to be over this time frame? To that end I created a multiple choice survey, with each question having four answers. The questions used the following scoring system:

- 0 = The practice, tool or what-not mentioned in the question is not used / followed

- 1 = It is used / followed, but in an obsolete manner

- 2 = It is used / followed according to current standards

- 3 = It is used / followed according to our future goals

The questions fitted into five broad categories:

- Configuration Management

- Tools

- Practices

- Code Quality

- Testing

If you take the averages across all of your development teams you can chart it like so:

At a glance you can tell whether standards are being met, whether software quality aspirations are being achieved, or whether certain aspects of your development practices are below par. The more of the circle that is filled, the better you are at achieving your goals. When your scores mainly fall between two and three, it is time to raise the bar.

This information is useful in a couple of ways. If you plot the scores in ascending order you can tell which things need to improve and spend your effort on them, based on their priority. You can group this info into categories to ascertain whether you have any problem categories.

You can also plot individual teams against the average. This enables you to highlight to all devs what a particular team is good at. It also lets teams know where to get help from other teams who have a higher score for that particular practice.
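The averaging and ordering described above can be sketched in a few lines. This is a minimal illustration, not the spreadsheet or tooling actually used for the survey: the `Answer` record, the `QualityRadar` class, and the sample teams and practices are all hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// One survey answer: a team's 0-3 score for one practice in one category.
public record Answer(string Team, string Category, string Practice, int Score);

public static class QualityRadar
{
    // Average score per practice across all teams, weakest practice first.
    public static List<(string Practice, double Average)> WeakestFirst(IEnumerable<Answer> answers) =>
        answers.GroupBy(a => a.Practice)
               .Select(g => (Practice: g.Key, Average: g.Average(a => a.Score)))
               .OrderBy(p => p.Average)
               .ToList();

    // Average score per category, to spot problem categories.
    public static Dictionary<string, double> CategoryAverages(IEnumerable<Answer> answers) =>
        answers.GroupBy(a => a.Category)
               .ToDictionary(g => g.Key, g => g.Average(a => a.Score));

    public static void Main()
    {
        // Made-up sample data for two teams and two practices.
        var answers = new[]
        {
            new Answer("Team A", "Testing", "Unit tests", 2),
            new Answer("Team B", "Testing", "Unit tests", 1),
            new Answer("Team A", "Tools", "CI server", 3),
            new Answer("Team B", "Tools", "CI server", 2),
        };

        // "Unit tests" (average 1.5) is listed before "CI server" (average 2.5).
        foreach (var (practice, average) in WeakestFirst(answers))
        {
            Console.WriteLine($"{practice}: {average}");
        }
    }
}
```

Filtering the same answers by `Team` before calling `WeakestFirst` gives the per-team figures to plot against the overall average.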

If you re-run every quarter or so, you can evaluate how the organisation has changed, identifying what worked and what didn’t. It’s basically a retrospective at a higher organisational level.

Design Patterns Study Group

We’ve been running a weekly lunchtime session for interested developers on design patterns. I suggest that you do the same, as we have found it very valuable in coming to a common understanding of what each pattern is about, when you might use it and real world examples in our current code.

Somebody made the point today that we seem to have the most debate about what we perceive to be the most widely used and understood [particularly creational] patterns. This for me highlights the importance of running these sessions. We use something like the approach outlined here, but announce which pattern/s we will be looking at next week based on what is related / of interest / controversial.

Software Craftsmanship

I’m helping to organise the Software Craftsmanship 2009 conference to be held at the BBC Worldwide offices in West London on the 26th Feb 2009.

I’m a member of the selection panel and requests for proposals are now open. If you would like to run an interactive session with a bunch of passionate developers, may I suggest that you propose a session as soon as you can. Jason has done a great job in assembling the selection panel, which contains many leading lights in the world of software.

Registration for the conference opens on the 1st December.

Quality in the Real World

We have been producing code quality dashboards at work. They are produced by our CI server whenever a successful build is produced. This allows developers to get quick feedback on the quality of code they have just committed. We can compare projects and raise their quality over time. This is all very well and good, but wouldn’t it be nice to see how we are doing compared to the outside world?

So without further ado I present to you four code quality dashboards for a selection of .NET open source projects [and one MS one!]

As you can see, quality is variable, with NMock and ASP.NET MVC doing well. Fifty percent unit test coverage for NUnit is a bit shocking however!

The Ultimate Code Smell

Bob Martin has been thinking about adding a new element to the agile manifesto around producing quality rather than quantity. He’s described this as ‘Craftsmanship over Execution’. To back this up you can follow the instructions here and measure the number of WTFs per minute. A great idea for a metric, but hard to automate. Maybe an idea for a new startup: provide metrics for code in the same way third party companies perform penetration testing.

Quality Testing

As part of a continuous integration cycle most people consider running unit and integration tests. Some even consider running automated acceptance tests. Fewer still focus on code quality tests. To ensure code is maintainable requires a certain amount of effort as the code changes. I think this is what the refactor stage of the TDD red, green, refactor cycle alludes to. As well as refactoring code to remove duplication, there are other considerations to be made with regards to maintainability. We use six indicators to give a finger in the air estimate of the maintainability of a code base. The indicators we use are as follows:

Unit Test Coverage: High test coverage is a good indicator of whether a TDD approach is being followed and, if not, an optimistic estimate of the chance of a bug being caught. Said another way, if a bug is introduced into the code, the chance of it being caught is at best the percentage of code covered by tests. This very much depends on the quality of the tests, but if you only have fifty percent coverage and introduce a bug, it’s a coin toss whether it’s detected. If the tests are poor the real figure is much lower than fifty percent.

Percentage of large methods: Fairly obvious this one, but large methods are harder to maintain because they contain more code. There is more scope for error, less accuracy in identifying the cause of any error [any unit test covers more code] and a greater chance that the method is breaking the single responsibility principle, giving it more than one reason to change. What you consider a large method is up to you, but we have been using ten lines of code as our measure.

Class Cohesion: For a class to be cohesive all methods should use all fields. We use the Henderson-Sellers lack of cohesion of methods formula to measure this one. If a class isn’t cohesive it’s an indicator that unrelated functionality could be split into its own class. In other words it has more than one reason to change and is therefore breaking the single responsibility principle.

Package Cohesion: For a package or assembly to be cohesive the classes inside the package should be strongly related. This is a measure of the average number of type relationships within a package. Low cohesion suggests that the types can be split into separate packages.

Class Coupling: This is a measure of the number of types that depend on a particular type, a.k.a. afferent coupling. If a high number of types depend on the class in question, making changes to it will be hard without breaking lots of client code. There are a number of reasons why this might occur. Responsibility for one aspect may be split among multiple classes, but more likely you don’t have a loosely coupled design.

Package Coupling: This is a measure of the number of types outside this package or assembly that depend upon types within this package. One possible reason for high coupling is a packaging problem – things that change together should stay together. Another reason is that the packages in question have many responsibilities.
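Three of these indicators reduce to simple arithmetic once the raw facts have been extracted from the code. The sketch below shows that arithmetic only; how the facts are gathered is left out, and it is not the tooling behind our dashboards. The class and method names are hypothetical, "large" is assumed to mean more than ten lines, and the cohesion formula is the commonly quoted Henderson-Sellers form, (M - sum(MF)/F) / (M - 1), where M is the number of methods, F the number of fields and MF the number of methods using a given field.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class MaintainabilityIndicators
{
    // Percentage of methods with more lines than the threshold.
    public static double PercentLargeMethods(IReadOnlyCollection<int> methodLineCounts, int threshold = 10)
    {
        if (methodLineCounts.Count == 0) return 0.0;
        int large = methodLineCounts.Count(lines => lines > threshold);
        return 100.0 * large / methodLineCounts.Count;
    }

    // Henderson-Sellers lack of cohesion: 0 when every method uses every field,
    // rising as fields are used by fewer methods.
    // methodsPerField holds, for each field, how many methods access it.
    public static double LcomHs(int methodCount, IReadOnlyCollection<int> methodsPerField)
    {
        if (methodCount < 2 || methodsPerField.Count == 0) return 0.0;
        double averageUsage = methodsPerField.Average();
        return (methodCount - averageUsage) / (methodCount - 1);
    }

    // Afferent coupling: for each type, the number of distinct other types that depend on it.
    public static Dictionary<string, int> AfferentCoupling(IEnumerable<(string From, string To)> dependencies) =>
        dependencies.Where(d => d.From != d.To)
                    .Distinct()
                    .GroupBy(d => d.To)
                    .ToDictionary(g => g.Key, g => g.Count());

    public static void Main()
    {
        // Two of four methods exceed ten lines: 50 percent.
        Console.WriteLine(PercentLargeMethods(new[] { 3, 8, 12, 25 }));

        // Four methods, two fields, each field used by all four methods: 0.
        Console.WriteLine(LcomHs(4, new[] { 4, 4 }));

        // Four methods, two fields, each field used by a single method: 1.
        Console.WriteLine(LcomHs(4, new[] { 1, 1 }));

        // Made-up dependency edges: Repository is depended on by two types.
        var coupling = AfferentCoupling(new[]
        {
            ("OrderService", "Repository"),
            ("InvoiceService", "Repository"),
            ("OrderService", "Logger"),
        });
        Console.WriteLine(coupling["Repository"]); // 2
    }
}
```

Package coupling follows the same shape as `AfferentCoupling` if the edges are restricted to those that cross a package boundary.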

I’d love to hear feedback on the way you measure the maintainability of code.

Refactoring Made Easier

JetBrains TeamCity is a build management and continuous integration platform which supports .NET and Java. Having set up TeamCity and played with it for a couple of weeks, I’m very impressed by the slick UI and features provided out of the box. These include a set of code quality features. Even better is the fact that the professional version is free.

One of the things that really impressed me is the duplicates finder. As the name suggests it detects duplicate code and currently works with Java, C# [up to 2.0] and VB [up to 8.0]. This helps you target the areas that need refactoring.

Alongside the duplicates [Java example above] a ‘cost’ is calculated. I’m not sure of the algorithm used, but it seems fairly sensible and the cost has some relation to the amount of code that is repeated. You can use this to help prioritise your refactoring. To set up your build simply set the runner to be the duplicates finder.

Consistency Is Key

I’m sure I am not the only one who puzzles over Microsoft’s naming conventions for C#. I understand the Pascal and camel casing rules, but what I don’t get is the exception made for two-letter acronyms. I’m looking at creating a dictionary of my code so that I can start to see patterns and opportunities for re-use. To do this I need to be able to break up the name of a method or class into its constituent parts. Regular expressions seemed like a good way to go. It is fairly trivial to write a regex that matches the parts that make up a Pascal or camel cased identifier. What makes this tricky is the exception for short acronyms. Here is my attempt:

Regex splitName = new Regex(@"^I(?=(\p{Lu}{2})*(\p{Lu}\p{Ll}|$))|(^\p{Ll}+|\p{Lu}\p{Ll}+|\p{Lu}{2})");

It breaks down like so: Continue reading
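To see the pattern at work, here is a minimal sketch that wraps it in a helper and runs it over three identifiers chosen purely for illustration. `NameSplitter` and `Split` are hypothetical names introduced for this example.

```csharp
using System;
using System.Linq;
using System.Text.RegularExpressions;

public static class NameSplitter
{
    // Same pattern as above: an interface-style leading "I", a leading lowercase
    // run, a capitalised word, or a two-letter acronym.
    private static readonly Regex SplitName = new Regex(
        @"^I(?=(\p{Lu}{2})*(\p{Lu}\p{Ll}|$))|(^\p{Ll}+|\p{Lu}\p{Ll}+|\p{Lu}{2})");

    // Returns the successive matches as the parts of the identifier.
    public static string[] Split(string identifier) =>
        SplitName.Matches(identifier).Cast<Match>().Select(m => m.Value).ToArray();

    public static void Main()
    {
        Console.WriteLine(string.Join(" ", Split("IDisposable"))); // I Disposable
        Console.WriteLine(string.Join(" ", Split("IOStream")));    // IO Stream
        Console.WriteLine(string.Join(" ", Split("getUserName"))); // get User Name
    }
}
```

The second case is the interesting one: the lookahead stops the leading `I` of `IOStream` from being treated as an interface prefix, so the two-letter acronym `IO` is kept whole.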

The Chicken Dance

Well, that’s what it sounds like when my antipodean friends talk about the process of checking in code. Seriously though, development is a complex beast, as you realise when you start talking in detail about any of the constituent tasks that a developer is responsible for. Take developers’ testing responsibilities as an example [which I conveniently ran a workshop on last week]. These break down into the two broad areas of verification and validation.

A workshop to figure these things out is a very useful tool and one that I would recommend at your workplace. It is truly incredible the depth of coverage you can get in a topic with ten people sitting around the table. We spent 5-10 minutes where everybody wrote down each responsibility or task they could think of on a separate post-it. We went through a whole pack of post-it notes [admittedly with a bit of duplication]. We then took it in turns to stick the post-its on the board, roughly grouping and recognising duplicates as we did so. I’m currently busy writing these up for our internal wiki. Making developers aware of the broad range of testing tasks and supporting this with automation of acceptance tests, unit tests etc. will really help improve the quality of the code delivered to the QA teams and eventually the customer. Ideally everybody will pick up on this and you will end up with a zero defect culture.